'AI Will Figure Out Ways Of Manipulating People To Do What It Wants', Warns 'Godfather Of AI'

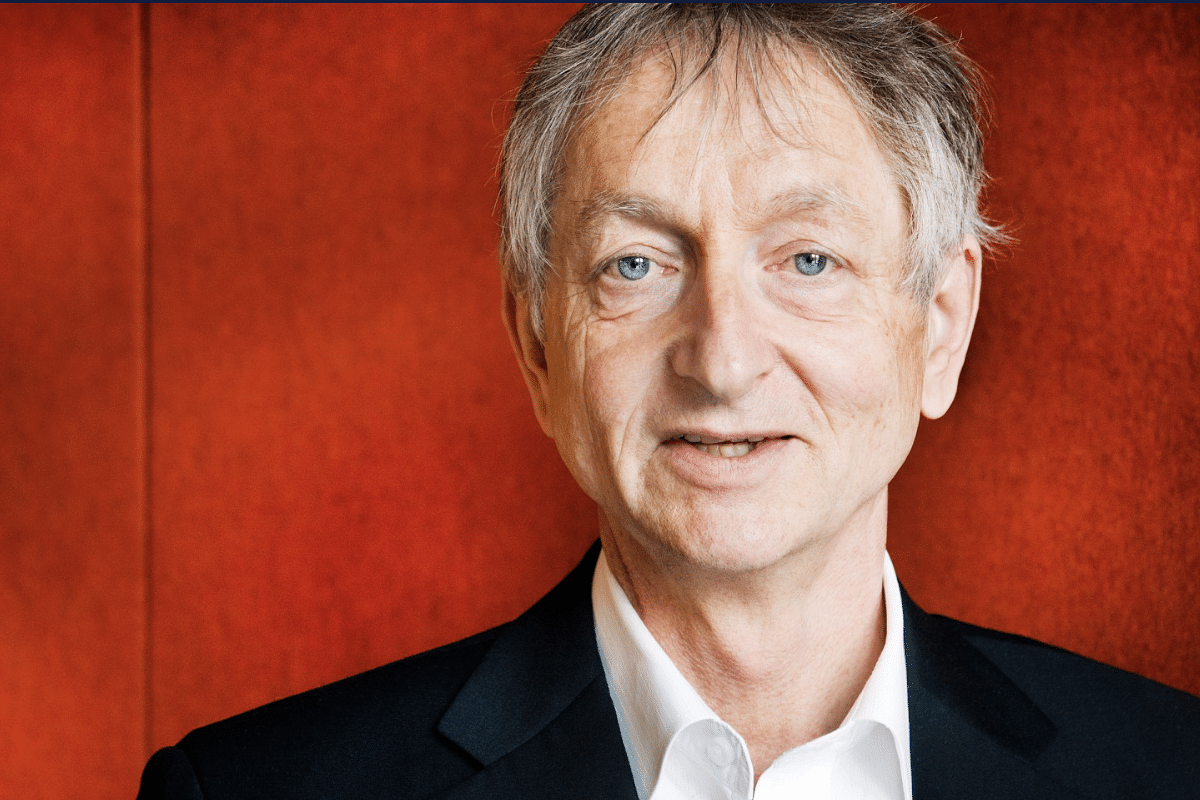

Geoffrey Hinton (Pic Via MIT Technology Review)

Geoffrey Hinton, who is sometimes referred to as the 'Godfather of AI', has said that artificial intelligence (AI) may figure out ways to manipulate people into doing what it wants.

Hinton recently quit tech giant Google so he could warn about the dangers of AI.

Hinton’s pioneering work on neural networks shaped artificial intelligence systems powering many of today’s products.

He worked part-time at Google for a decade on the tech giant’s AI development efforts.

He started working for the company in 2013, and while at Google, he designed machine learning algorithms.

In a tweet on Monday, Hinton said he left Google so he could speak freely about the risks of AI, not because he wanted to criticise Google specifically.

“I left so that I could talk about the dangers of AI without considering how this impacts Google,” Hinton said in a tweet.

“Google has acted very responsibly,” he added.

Hinton has called for people to figure out how to manage technology that could greatly empower a handful of governments or companies.

Hinton has said that the new generation of large language models - especially GPT-4, which OpenAI released in March - has made him realise that machines are on track to be a lot smarter than he thought they’d be.

“These things are totally different from us,” he told MIT Technology Review.

“Sometimes I think it’s as if aliens had landed and people haven’t realized because they speak very good English,” Hinton added.

Hinton fears that these tools are capable of figuring out ways to manipulate or kill humans who aren’t prepared for the new technology.

“I have suddenly switched my views on whether these things are going to be more intelligent than us. I think they’re very close to it now and they will be much more intelligent than us in the future,” he said.

“How do we survive that?” Hinton asked.

In an interview with CNN, when asked how AI could possibly figure out a way to manipulate or kill humans, Hinton said, "If it (AI) gets to be much smarter than us, it will be very good at manipulation because it would have learned that from us (humans)."

"And (there are) very few examples of a more intelligent thing being controlled by (a) less intelligent thing, and it knows how to programme, so it will figure out ways of getting around restrictions we put on it," he said.

"It will figure out ways of manipulating people to do what it wants," Hinton added.

The 75-year-old computer scientist had divided his time between the University of Toronto and Google since 2013, when the tech giant acquired Hinton’s AI startup DNNresearch.

Hinton’s company was a spinout from his research group, which was doing cutting-edge work with machine learning for image recognition at the time. Google used that technology to boost photo search and more.

Hinton is best known for an algorithm called backpropagation, which he first proposed with two colleagues in the 1980s.

The technique, which allows artificial neural networks to learn, today underpins nearly all machine-learning models.

In a nutshell, backpropagation is a way to adjust the connections between artificial neurons over and over until a neural network produces the desired output.
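To make that idea concrete, here is a minimal sketch of backpropagation in plain Python. It is an illustrative toy, not Hinton's original formulation or code: the task (learning XOR), the network size (two inputs, three hidden neurons, one output), the sigmoid activations, the squared-error loss and the learning rate are all assumptions chosen for brevity, and the function name `train_xor` is hypothetical. Each pass runs the inputs forward, measures the error at the output, sends that error backwards through the connections, and nudges every weight slightly in the direction that reduces it.

```python
import math
import random


def sigmoid(x):
    """Logistic activation used by every artificial neuron in this toy network."""
    return 1.0 / (1.0 + math.exp(-x))


def train_xor(epochs=5000, lr=0.5, hidden=3, seed=0):
    """Hypothetical example: train a 2-input, `hidden`-unit, 1-output network on XOR.

    Returns the learned parameters and the mean squared error for each epoch.
    """
    rng = random.Random(seed)
    # w1[j][i]: connection from input i to hidden neuron j; b1[j]: hidden bias
    w1 = [[rng.uniform(-1, 1) for _ in range(2)] for _ in range(hidden)]
    b1 = [0.0] * hidden
    # w2[j]: connection from hidden neuron j to the output neuron; b2: output bias
    w2 = [rng.uniform(-1, 1) for _ in range(hidden)]
    b2 = 0.0
    data = [((0, 0), 0), ((0, 1), 1), ((1, 0), 1), ((1, 1), 0)]
    losses = []

    for _ in range(epochs):
        total = 0.0
        for x, target in data:
            # Forward pass: compute hidden activations, then the network's output
            h = [sigmoid(w1[j][0] * x[0] + w1[j][1] * x[1] + b1[j]) for j in range(hidden)]
            y = sigmoid(sum(w2[j] * h[j] for j in range(hidden)) + b2)
            total += 0.5 * (y - target) ** 2

            # Backward pass: error signal at the output neuron (chain rule)
            delta_out = (y - target) * y * (1 - y)
            # Propagate that error back to each hidden neuron (computed before w2 changes)
            delta_h = [delta_out * w2[j] * h[j] * (1 - h[j]) for j in range(hidden)]

            # Adjust every connection a small step against its error gradient
            for j in range(hidden):
                w2[j] -= lr * delta_out * h[j]
                w1[j][0] -= lr * delta_h[j] * x[0]
                w1[j][1] -= lr * delta_h[j] * x[1]
                b1[j] -= lr * delta_h[j]
            b2 -= lr * delta_out
        losses.append(total / len(data))

    return (w1, b1, w2, b2), losses
```

Calling `params, losses = train_xor()` returns the adjusted weights along with the error history. Because the same forward-then-backward adjustment is repeated thousands of times, the recorded error typically shrinks from one epoch to the next, which is the "over and over until the network produces the desired output" behaviour described above.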

Hinton believed that backpropagation mimicked how biological brains learn. He has been looking for even better approximations since, but he has never improved on it.
