
RISC-V: An Open-source Churn In Computational Hardware Electronics

Dr Santhosh Onkar

Nov 18, 2022, 06:00 AM | Updated Nov 18, 2022, 01:00 AM IST


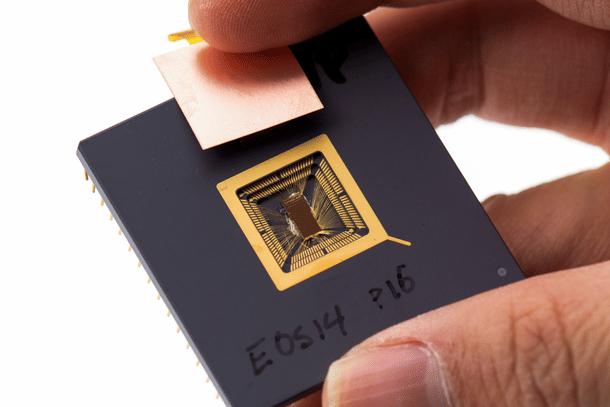

Central processing units (CPUs), or processors, are ubiquitous, be it in computers or phones, missiles or mopeds, toys or taxis.

CPUs are built on proprietary instruction set architectures (ISAs), over 90 per cent of them from Intel or Arm, setting aside for now the subtle difference between microprocessors and embedded microcontrollers.

In effect, any business conceiving an electronic product that needs processing hardware must pay royalties and accept whatever the proprietor makes available.

And if the business is in a country that ends up on the wrong side of the geopolitical equation, it could potentially face sanctions, curtailing the country’s trade security in electronic hardware.

Enter RISC-V ISA, which is open-source; that is, royalty-free, and thus free of sanctions too.

Let’s take a quick tour of the RISC-V journey so far.

Specifically: what does it entail technologically, and what are its geopolitical implications for Indian industry if India aspires to be an “actual design powerhouse”? How does the road ahead for an Indian design powerhouse look in the RISC-V context?

This series explores some of these questions.

Let’s begin with a brief technical background of a simplified computational stack.

TLDR Version Of A Computational Stack

Any (good) engineer who attempts to devise a solution (that is, a product) to a problem involving an electronic system must have some intuition on what constitutes a simplified compute technology stack (CTS).

Let me hazard an abstract analogy of CTS.

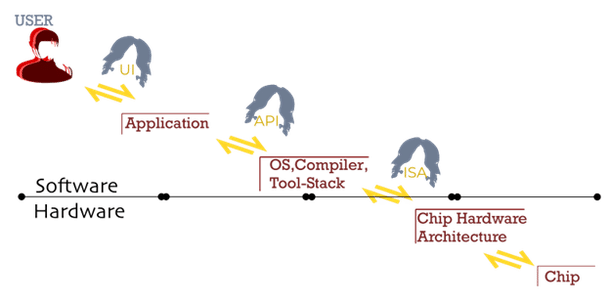

Think of it as a self-contained restaurant with a multitiered structure: you, the consumer or user, sit at one end consuming the product, while many layers of the stack work behind the scenes, descending from the garçon or waiter facing you, to the chef, to the farmer, to the grain or produce.

All these actors work at different layers, with defined interfaces between them, to bring to you a product that aims to ease your hunger.

Porting this idea to electronics-industry jargon, the broad (and over-simplified) abstraction layers are (Figure 1): application, operating system (including tool sets like compilers and assemblers), chip hardware architecture, and finally, the silicon chip.

ISA: RISC-V, The New Teen On The Block

The ISA constitutes elements employed in communication at the hardware-software interface.

Just as a language dictionary houses communicable words (nouns, verbs, adjectives) along with grammatical syntax and meaning, an ISA contains the functional definitions of supported operations (add, multiply, and so on), memory-access operations (load, store), the storage locations supported by hardware, and a precise description of how to invoke and access hardware elements.
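To make the dictionary analogy concrete, here is a minimal sketch of a made-up four-instruction ISA and a tiny interpreter for it. Everything in it (the `run` function, the instruction tuple format, the four registers) is a hypothetical illustration, not RISC-V or any real ISA.

```python
# Toy illustration only: a made-up four-instruction ISA, not RISC-V.
from typing import Dict, List, Tuple

# Each instruction is (operation, a, b, c); unused fields are set to 0.
Instruction = Tuple[str, int, int, int]


def run(program: List[Instruction], memory: Dict[int, int]) -> List[int]:
    """Execute a programme on a machine with four registers; return the registers."""
    regs = [0, 0, 0, 0]  # storage locations this hypothetical ISA exposes
    for op, a, b, c in program:
        if op == "load":       # regs[a] <- memory[b]
            regs[a] = memory[b]
        elif op == "store":    # memory[b] <- regs[a]
            memory[b] = regs[a]
        elif op == "add":      # regs[a] <- regs[b] + regs[c]
            regs[a] = regs[b] + regs[c]
        elif op == "mul":      # regs[a] <- regs[b] * regs[c]
            regs[a] = regs[b] * regs[c]
        else:
            # Anything outside the "dictionary" is meaningless to the hardware.
            raise ValueError(f"operation not defined by this ISA: {op}")
    return regs


memory = {0: 6, 1: 7, 2: 0}
program = [
    ("load", 0, 0, 0),   # r0 = mem[0]
    ("load", 1, 1, 0),   # r1 = mem[1]
    ("mul", 2, 0, 1),    # r2 = r0 * r1
    ("store", 2, 2, 0),  # mem[2] = r2
]
regs = run(program, memory)
print(regs, memory[2])
```

The software (the `program` list) and the hardware (the `run` function) agree only on the instruction names, their operands, and the registers and memory they touch. That shared contract is the role an ISA plays.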

The ISA is a vital ingredient in defining how fast (or slow) a computation is executed and/or how much power it burns. The dominant ISAs include x86, championed by Intel; the Arm ISA, developed and licensed by Arm; and the relatively new (that is, a decade old) RISC-V ISA.

RISC-V ISA is the result of efforts made at the University of California, Berkeley, for a minimalistic architecture that could reduce the entry barrier for chip development.

Let’s understand the genesis and evolution of some of these design strategies that led to the development of various ISAs so as to better appreciate the impending disruption with RISC-V adoption.

Blurred CISC v/s RISC Boundaries

At the dawn of computing systems (1960s), computer architects jostled with the same two constraints that every problem-solver toils with day in and day out, namely, cost and speed.

Cost

The early computers used slow and expensive magnetic memories to hold the programme code.

Eventually, the invention of semiconductor Random Access Memory (RAM) partly addressed the “speed” constraint and, in the process, opened a market for memory makers such as Intel, producing SRAM (1969), and Toshiba, producing DRAM (1985).

Geopolitically, this led to a long-drawn competition between the United States and Japan in the DRAM market. The subsequent forays of many Asian competitors like Samsung and Hynix helped bring off-chip memory costs down. The memory was 800 times cheaper after 15 years.

Until the early 1990s, coding in the assembly language was the de facto choice to produce compact software; that is, the smaller the code, the smaller the memory needed to store the code; hence, the lower the cost.

Speed

The simplified equation that characterises the performance of a compute hardware is:

Execution time per programme = (instructions per programme) × (average cycles per instruction) × (clock cycle time)

For a given system to run a piece of code faster — that is, take less time (the left hand side or LHS of the equation) — there are three primary knobs at play (terms on the right hand side or RHS).
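As a rough worked example of the equation, the sketch below halves each knob in turn. The instruction count, cycles per instruction, and clock frequency are hypothetical numbers chosen for easy arithmetic, and `execution_time` is an illustrative helper, not a standard function.

```python
def execution_time(instructions: int, cycles_per_instruction: float, clock_hz: float) -> float:
    """Seconds per programme = instructions x cycles per instruction x clock cycle time."""
    clock_cycle_time = 1.0 / clock_hz  # seconds per clock cycle
    return instructions * cycles_per_instruction * clock_cycle_time


# Hypothetical baseline: 1 million instructions, 2 cycles each, 1 GHz clock.
baseline = execution_time(1_000_000, 2.0, 1e9)

# Turn each knob on its own, leaving the other two at the baseline.
fewer_instructions = execution_time(500_000, 2.0, 1e9)   # knob 1: instruction count
lower_cpi = execution_time(1_000_000, 1.0, 1e9)          # knob 2: cycles per instruction
faster_clock = execution_time(1_000_000, 2.0, 2e9)       # knob 3: clock cycle time

for label, seconds in [
    ("baseline", baseline),
    ("fewer instructions", fewer_instructions),
    ("lower cycles per instruction", lower_cpi),
    ("faster clock", faster_clock),
]:
    print(f"{label}: {seconds * 1e3:.2f} ms")
```

In this idealised sketch, halving any one term halves the execution time. In real designs the terms are not independent: reducing one usually pushes another up.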

The first option was to reduce the number of instructions per programme (the first term on the RHS of the equation): the software needs fewer instructions and therefore less memory, so both the “cost” and “speed” constraints are met.

Thus began the genre of complex instruction set computer (CISC) systems, later popularised by Intel's x86. The strategy was to push complexity towards the hardware so that each instruction did more.

The instruction count of the ISA grew, and the hardware implementing it became more complex.

The reduced instruction set computer (RISC) was a counter-movement championed by Berkeley, IBM, and Stanford groups.

IBM, in the 1980s, did extensive profiling of available ISAs and concluded that many instructions of ISAs were redundant and could be eliminated.

The RISC approach focused on the second term of the performance equation, the cycles per instruction, reducing it by trimming the instruction set and adding features like pipelining, simpler addressing modes, and a larger architectural register count.

This came at the expense of longer programmes needing larger storage, which was acceptable because memory had become relatively cheap.
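The trade-off can be sketched with two invented designs. All figures below (instruction counts, cycles per instruction, bytes per instruction, clock) are hypothetical and picked only to show the direction of the trade, not measurements of any real processor. The `Design` class is likewise an illustrative assumption.

```python
from dataclasses import dataclass


@dataclass
class Design:
    name: str
    instructions: int             # instructions per programme
    cpi: float                    # average cycles per instruction
    bytes_per_instruction: float  # average encoded instruction size
    clock_hz: float = 1e9         # same clock assumed for both designs

    def time_s(self) -> float:
        """Execution time from the performance equation."""
        return self.instructions * self.cpi / self.clock_hz

    def code_bytes(self) -> float:
        """Approximate memory needed to hold the programme."""
        return self.instructions * self.bytes_per_instruction


# Hypothetical numbers: the CISC-like design does more per instruction,
# the RISC-like design needs more instructions but fewer cycles for each.
cisc_like = Design("CISC-like", instructions=1_000_000, cpi=4.0, bytes_per_instruction=3.0)
risc_like = Design("RISC-like", instructions=1_300_000, cpi=1.5, bytes_per_instruction=4.0)

for d in (cisc_like, risc_like):
    print(f"{d.name}: {d.time_s() * 1e3:.2f} ms, {d.code_bytes() / 1e6:.1f} MB of code")
```

Under these assumptions, the RISC-like design runs a longer programme that occupies more memory yet finishes sooner, which is the bargain described above.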

The result was a flurry of RISC ISAs that claimed to be memory- and instruction-lite, also targeting a different genre of applications.

Nonetheless, the last two decades have seen increasing convergence between CISC and RISC. The RISC v/s CISC distinction therefore has little significance beyond academic contemplation.

What matters instead is a holistic analysis of the ISA and its implementation, the hardware architecture for a specific application space, and the physical implementation, since together these differentiate the key performance indicators (KPIs).

Role Of RISC-V In The Prevailing Design Strategy

The generic corporate strategy in the electronics ecosystem has been that companies buy commoditised supplies adhering to standards from their suppliers, do value-addition in-house or in collaboration with partners, and sell the outcome to their end customers.

The first epochal marker of these strategies in play came when the likes of HP, IBM, and others battled the incumbent x86 architecture with their own alternatives and lost out to the Intel-AMD duo in the personal computer (PC) and server market.

Then came Arm, the challenger to the Intel-AMD duo. Working with Apple, Qualcomm, and other fabless companies, it created the second marker by carving out its niche in Internet of Things (IoT) and mobile applications.

The crucial thing to note is that both these churns resulted in the creation of large respective ecosystems, raising the barrier for new entrants.

As seen in the CTS, every player operating in a layer (Figure 1) expects a standard at the lower level to increase their flexibility with vendor choice, second sourcing, and cost reduction by competition at lower layers.

The application-layer players (for example, IBM, SAP, HP) drove standardisation at the lower layer (that is, the operating system layer) with the Linux revolution in the 1990s, with Microsoft Windows as a declared opponent.

In the mid-2000s, this effort spilled over into portable electronic devices. As Apple disrupted the cell phone market with touch phones running on iOS, Google bought Android Inc and partnered with various chipset makers (Qualcomm, Texas Instruments, and so on), cell phone makers (HTC, Motorola, Samsung, and so on), and wireless carriers (T-Mobile, Sprint, and so on), culminating in the Android OS and associated ecosystem.

RISC-V ISA is a similar attempt to create an open-source standard at a lower layer — that is, chip architecture — challenging the virtual duopoly of Arm and x86 and reducing the barrier to any new chip maker.

Both the x86 ISA, jointly owned by Intel (32-bit) and AMD (64-bit), and the Arm ISA, licensed by Arm, are closed systems, with their respective companies controlling the direction of development (rightfully so). RISC-V, by contrast, is open-source.

The reader can make the crude analogy of RISC-V being the “Linux of chip architectures” (but with some qualifiers, which will be addressed in the future). All of its documentation and implementation code is publicly available to anyone who wants to adopt, modify, and build on it, with no licence fee.

The global RISC-V ecosystem is reaching the critical mass needed to catapult its adoption not only in existing markets but also in niche markets of its own (for example, artificial intelligence/machine learning).

Therefore, it is crucial that Indian mandarins understand the importance of these ecosystems and devise policies to create the necessary infrastructure to propel India’s self-sufficiency not only in the software stack but in the hardware stack as well.

In the next part of this multipart series, we explore the business models, geopolitics, key players of the global ecosystem, and the first commercial products on the market.

Using RISC-V as the litmus test, we attempt a reality check on Indian preparedness and a critical gaze at the self-declared Indian claims of becoming a “design powerhouse.”