
Innovation Nation: Machines Are Not Yet Omniscient

Machines are getting pretty good at specialized tasks, but they are still rather bad at being generalists. There is considerable worry among people that there will be massive job-losses as more and more jobs get taken over by machines. But there have been several false dawns for Artificial Intelligence in the past, and it has always managed to disappoint us.

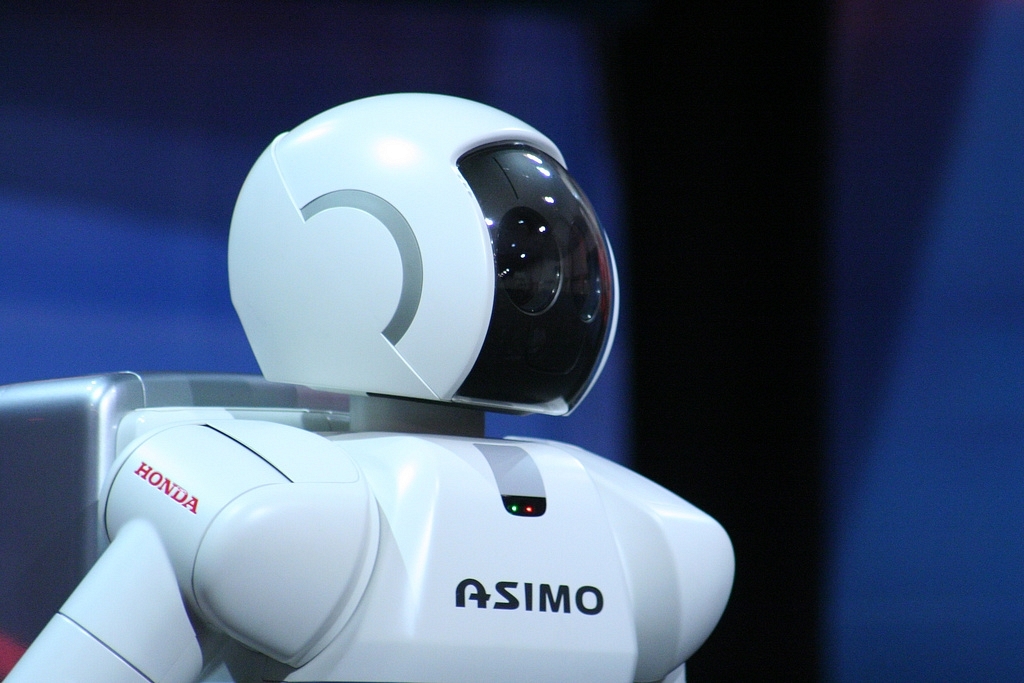

Asimo Robot, the man-made machine with a maiden name. He has a head.

Events in the recent past have given us opposing perspectives on how well Artificial Intelligence (AI) is doing. The sum total of the fallout from these is that the status quo ante prevails: machines are getting pretty good at specialized tasks, but they are still rather bad at being generalists. This may mean that it will be a few more years yet before we are all out-competed for jobs by untiring, unforgetting, unflappable computers that are also ruthlessly logical and omniscient.

The most spectacular event, of course, was Google DeepMind's defeat of Lee Sedol, one of the world's top Go players, by a devastating margin of 4-1 in a five-game match, after taking the first three games in a row. Go is supposed to be much harder than chess, and experts had not expected a machine to achieve this feat for another ten years. The ease with which 'deep-learning' algorithms came up with winning techniques was quite amazing.

Apparently, the algorithm did not use the 'brute-force' technique that was successfully used in chess, where computers searched through enormous numbers of possible moves from a given position and then backtracked to the best alternative. Instead, the enormity of the Go problem forced the algorithm designers to create a system modelled on the brain's neural networks, one that learns mostly from experience and from the hypotheses it forms through observation.

I understand that the self-learning algorithms watch millions of games and are able to come up with entirely unexpected lines of play that human champions had not thought of. Significantly, though, in game 4, when the human player switched to a strategy vastly different from the one he had used before (the one the machine, having studied his past games, was expecting), he was able to befuddle it into rather elementary errors.

Thus, it appears that AI is, on the one hand, able to discover new ideas, while on the other hand it (still) lacks 'common sense'. This was made all the more evident by the failure of a Microsoft experiment that let loose a bot named Tay on Twitter to interact with young users and learn from them. Hilariously, it turned out that Tay quickly became sexist, racist, homophobic and anti-Semitic! Microsoft was forced to turn it off within hours of its release into the wild.

Well, Microsoft is infamous for releasing things before they are ready and expecting the public to debug them for free, but even taking that habit into account, this is an embarrassment. The programme, of course, failed to take into account the fact that Twitter is rather full of trolls and unfettered discourse. No common sense, as I said.

The third incident was the first notable crash of a Google self-driving car. Apparently the algorithm expected a bus driver to let it merge back into traffic, but the bus driver thought otherwise, leading to a low-speed collision, although nobody was injured. Many people are concerned about the legal aspects of autonomous devices, including liability, and that applies not only to cars but also to increasingly ubiquitous drones, some of which can navigate on their own.

What this suggests is that fully self-driving cars may be farther off than we thought, and that the incremental approach adopted by automobile manufacturers (adding AI techniques to assist human drivers) may be more sensible, at least in the short run.

The performance of IBM's Watson software has not quite lived up to expectations either. When it won the quiz show 'Jeopardy' in 2011, it was suggested that Watson would soon be a good assistant to people in many professions, because it could understand natural language with a fair degree of competence. Understanding human language has been a notoriously difficult task for machines, and Watson was the first machine to demonstrate the ability to follow puns, innuendo, and other figures of speech.

For instance, Watson could be a good medical or legal assistant, able to talk to patients or clients and understand what they are saying. It would also be able to search the world's databases quickly, giving it access to the latest research or case precedents. And Watson would never be fatigued, have a bad hair day, or get into a fight with the boss. In effect, according to the hype, it would be the best employee ever. But it has not yet reached the stage where it can actually perform these tasks.

There is considerable worry among people (and they are not necessarily Luddites, either) that there will be massive job-losses as more and more jobs get taken over by machines. But there have been several false dawns for AI in the past, and it has always managed to disappoint us, at least so far. Way back in the 1980s, there was the feeling that AI was on a winning track. However, that didn’t lead to anything much, possibly because computer hardware wasn’t powerful enough.

There is no doubt that intelligent machines will eventually overtake humans, but that day is not quite here yet. Artificial intelligence, it appears, is always a decade or two away. Humans may manage to blunder on for a few more years.
