Expect Generative AI On Steroids With NVIDIA’s Launch Of Newest Chip

Karan Kamble

Mar 20, 2024, 12:33 PM | Updated 12:33 PM IST

Chipmaker NVIDIA has unveiled the “Blackwell” platform. The new advancement in computing promises to revolutionise how organisations use artificial intelligence (AI).

It's safe to say that AI, and generative AI in particular, is the standout technology of our time. NVIDIA founder and chief executive officer (CEO) Jensen Huang acknowledged as much, highlighting Blackwell's potential to launch generative AI into new, higher orbits.

“Generative AI is the defining technology of our time. Blackwell is the engine to power this new industrial revolution. Working with the most dynamic companies in the world, we will realize the promise of AI for every industry,” he said, as per an NVIDIA press release.

The Blackwell platform enables real-time generative AI on trillion-parameter large language models (LLMs) at up to 25 times lower cost and energy consumption than its predecessor.

According to NVIDIA, the system can deploy a 27-trillion-parameter model. For reference, OpenAI’s GPT-4 is reported to have about 1.7 trillion parameters, although OpenAI has not officially disclosed the figure.
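To put these parameter counts in perspective, here is a back-of-the-envelope Python sketch that estimates how much memory the model weights alone would occupy at different numerical precisions. It rests on our own simplifying assumptions (weights only, with no optimizer state, activations or cache; decimal terabytes; and the 192 GB per-GPU figure quoted for the B200 in this article). It is an illustration, not a description of how NVIDIA actually deploys such models.

```python
import math

# Illustrative assumptions (ours, not NVIDIA's): only the weights are counted,
# and units are decimal (1 GB = 10**9 bytes, 1 TB = 10**12 bytes).
B200_MEMORY_BYTES = 192 * 10**9  # 192 GB of HBM3e per B200, as quoted in the article


def weight_bytes(num_params: int, bits_per_param: int) -> int:
    """Bytes needed just to store the model weights at the given precision."""
    return num_params * bits_per_param // 8


def min_gpus_for_weights(num_params: int, bits_per_param: int) -> int:
    """Lower bound on how many 192 GB GPUs are needed to hold the weights."""
    return math.ceil(weight_bytes(num_params, bits_per_param) / B200_MEMORY_BYTES)


if __name__ == "__main__":
    models = [
        ("27-trillion-parameter model", 27 * 10**12),
        ("GPT-4 (reported ~1.7 trillion)", 17 * 10**11),
    ]
    for name, params in models:
        for bits in (16, 8, 4):
            terabytes = weight_bytes(params, bits) / 10**12
            print(f"{name} at {bits}-bit: {terabytes:.2f} TB of weights, "
                  f"at least {min_gpus_for_weights(params, bits)} GPUs")
```

On these assumptions, the weights of a 27-trillion-parameter model would need about 13.5 TB even at 4-bit precision, at least 71 GPUs' worth of 192 GB memory, which is one reason models of this size are spread across many tightly connected GPUs.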

The Blackwell GPU architecture boasts six transformative technologies for accelerated computing, which are poised to drive breakthroughs in various areas — data processing, engineering simulation, electronic design automation, computer-aided drug design, quantum computing, and generative AI.

Many big tech organisations — Amazon Web Services, Dell Technologies, Google, Meta, Microsoft, OpenAI, Oracle, Tesla, and xAI — are expected to adopt the Blackwell platform.

Naturally, the leaders of these companies had glowing things to say about Blackwell.

Elon Musk, CEO of Tesla and xAI, said: “There is currently nothing better than NVIDIA hardware for AI.”

Mark Zuckerberg, founder and CEO of Meta, said he is “looking forward to using NVIDIA's Blackwell to help train our open-source Llama models and build the next generation of Meta AI and consumer products.”

“Blackwell offers massive performance leaps,” according to OpenAI CEO Sam Altman, who added that it “will accelerate our ability to deliver leading-edge models.”

The platform is named after David Harold Blackwell, a mathematician who specialised in game theory and statistics. He was the first Black scholar inducted into the National Academy of Sciences.

Blackwell succeeds NVIDIA’s Hopper architecture, which launched two years ago and went on to become the industry's gold standard. Although the two are only a couple of years apart, Blackwell represents a significant leap in AI computing.

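Blackwell's B200 GPU delivers 20 petaflops of AI performance from a single GPU, a substantial increase over Hopper's 4 petaflops, although NVIDIA quotes the Blackwell figure at the new 4-bit floating-point (FP4) precision and the Hopper figure at 8-bit (FP8) precision. This allows Blackwell to handle more complex AI tasks efficiently.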

The B200 GPU also boasts 208 billion transistors, more than double the 80 billion transistors in Hopper's GPUs, indicating a significant enhancement in processing capabilities.

Blackwell features 192 GB of HBM3e memory with 8 TB/s of bandwidth, a substantial increase in memory capacity and data-transfer speed over the SXM version of Hopper's H100, which offers 80 GB and about 3.35 TB/s.
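The generational jumps described above can be tabulated with a few lines of Python. The B200 numbers come from this article; the H100 numbers (80 billion transistors, 80 GB, about 3.35 TB/s, about 4 petaflops at FP8) are commonly cited H100 SXM specifications that we have added for comparison. The dictionary keys and the function name are our own illustrative choices.

```python
# B200 figures are from the article. H100 figures are commonly cited H100 SXM
# specifications (our addition). Note: the petaflops numbers are quoted at
# different precisions (FP4 for B200, FP8 for H100), so that ratio is not a
# like-for-like comparison.
B200 = {"ai_petaflops": 20, "transistors_billion": 208,
        "memory_gb": 192, "bandwidth_tb_s": 8.0}
H100 = {"ai_petaflops": 4, "transistors_billion": 80,
        "memory_gb": 80, "bandwidth_tb_s": 3.35}


def generational_ratios(new: dict, old: dict) -> dict:
    """Return new/old for every metric that both spec sheets share."""
    return {key: new[key] / old[key] for key in new if key in old}


if __name__ == "__main__":
    for metric, ratio in generational_ratios(B200, H100).items():
        print(f"{metric}: {ratio:.2f}x")
```

On these figures the B200 offers about 5 times the headline compute, 2.6 times the transistors and roughly 2.4 times the memory capacity and bandwidth. Part of the compute gain comes from counting operations at the lower FP4 precision.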

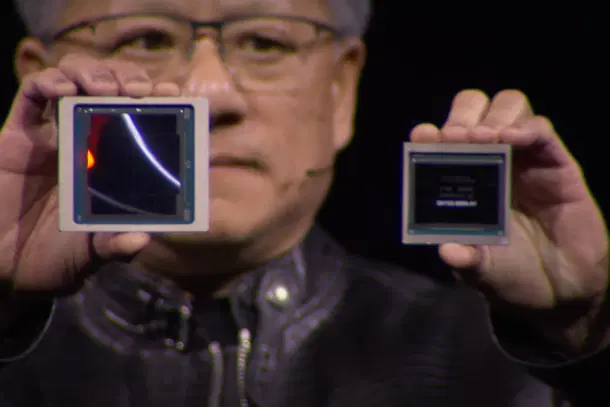

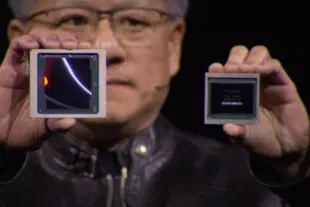

Notably, the B200 GPU is designed with a dual-die configuration, tightly coupled to function as one unified GPU.

The two dies are linked by NV-HBI, a high-bandwidth chip-to-chip interface running at 10 TB/s, which lets them exchange data fast enough to behave as a single GPU.

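Blackwell also incorporates a second-generation Transformer Engine, which automatically adjusts numerical precision to suit the computation at hand. This improves processing speed and energy efficiency without requiring programmers to tune precision by hand. Hopper introduced the first Transformer Engine; Blackwell's version adds support for 4-bit floating-point (FP4) arithmetic.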
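The general idea of lowering precision while choosing a scale from the data itself can be shown with a toy 4-bit quantizer. This is a generic block-scaled FP4 (E2M1) scheme in the spirit of the Open Compute Project's Microscaling (MX) formats, written in plain Python. It is not NVIDIA's Transformer Engine, whose internal logic the article does not describe, and the block size of 4 and all function names are our own illustrative choices.

```python
# Magnitudes representable by FP4 in the E2M1 layout (1 sign, 2 exponent and
# 1 mantissa bit), as used by the OCP Microscaling (MX) formats.
FP4_E2M1_MAGNITUDES = (0.0, 0.5, 1.0, 1.5, 2.0, 3.0, 4.0, 6.0)
FP4_MAX = FP4_E2M1_MAGNITUDES[-1]


def quantize_block(block):
    """Choose a scale from the block's own data so that its largest magnitude
    maps to FP4_MAX, then round every element to the nearest FP4 value."""
    amax = max(abs(x) for x in block)
    scale = amax / FP4_MAX if amax > 0 else 1.0
    codes = []
    for x in block:
        magnitude = abs(x) / scale
        nearest = min(FP4_E2M1_MAGNITUDES, key=lambda v: abs(v - magnitude))
        codes.append(-nearest if x < 0 else nearest)
    return codes, scale


def dequantize_block(codes, scale):
    """Map FP4 codes back to approximate real values."""
    return [c * scale for c in codes]


def fake_quantize(values, block_size=4):
    """Quantize and then dequantize `values`, using one scale per block."""
    out = []
    for start in range(0, len(values), block_size):
        codes, scale = quantize_block(values[start:start + block_size])
        out.extend(dequantize_block(codes, scale))
    return out


if __name__ == "__main__":
    weights = [0.1, -0.2, 0.3, 0.6, 40.0, 1.0, -3.0, 12.0]
    print(fake_quantize(weights))
```

Because each block gets its own scale, the small values in the first block survive almost exactly, while the outlier of 40.0 only degrades the precision of its own block (where 1.0 rounds to 0.0). Keeping such effects local is the motivation for scaling small blocks rather than whole tensors.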

If Hopper has been “the engine for the world’s AI infrastructure,” one can only wonder what Blackwell will become.

"When we were told that Blackwell's ambitions were beyond the limits of physics, the engineers said 'so what?' And so, this (Blackwell) is what happened," a proud Huang said at the NVIDIA GPU Technology Conference (GTC).

According to NVIDIA, training a GPT-4-class model over 90 days would have taken about 8,000 Hopper GPUs drawing 15 megawatts of power; the same training can now be done with only 2,000 Blackwell GPUs drawing 4 megawatts.
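NVIDIA's training comparison translates into total energy with simple arithmetic. This sketch assumes, as a simplification, that power draw stays constant for the full 90 days; the function names are our own.

```python
HOURS_PER_DAY = 24


def training_energy_mwh(power_mw: float, days: float) -> float:
    """Energy in megawatt-hours, assuming constant power for the whole run."""
    return power_mw * days * HOURS_PER_DAY


def watts_per_gpu(power_mw: float, num_gpus: int) -> float:
    """Average power implied per GPU by the total (megawatts converted to watts)."""
    return power_mw * 1_000_000 / num_gpus


# NVIDIA's figures as quoted in the article: a 90-day run in both cases.
hopper_mwh = training_energy_mwh(15, 90)     # 8,000 Hopper GPUs at 15 MW
blackwell_mwh = training_energy_mwh(4, 90)   # 2,000 Blackwell GPUs at 4 MW

if __name__ == "__main__":
    print(f"Hopper:    {hopper_mwh:,.0f} MWh, {watts_per_gpu(15, 8000):,.0f} W per GPU")
    print(f"Blackwell: {blackwell_mwh:,.0f} MWh, {watts_per_gpu(4, 2000):,.0f} W per GPU")
    print(f"Hopper run uses {hopper_mwh / blackwell_mwh:.2f}x the energy")
```

On these numbers, the Blackwell run would use about 8,640 MWh against roughly 32,400 MWh for Hopper, or about 27 per cent of the energy. Each Blackwell GPU actually accounts for slightly more power (about 2,000 W versus 1,875 W), so the saving comes from needing a quarter as many GPUs.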

The GB200 Grace Blackwell Superchip, a key component of the Blackwell platform, connects two B200 GPUs to an NVIDIA Grace CPU. According to NVIDIA, systems built from it deliver up to 30 times the LLM inference performance of the previous generation, with reduced cost and energy consumption.

A full rack built from these superchips, the GB200 NVL72, offers 1.4 exaflops of AI performance and 30TB of fast memory, setting a new standard for AI computing.
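The 1.4-exaflop figure is consistent with the per-GPU number quoted earlier. This quick check assumes, from NVIDIA's published descriptions rather than from this article, that the figure refers to the GB200 NVL72 rack of 72 Blackwell GPUs, each counted at the 20-petaflop FP4 rating.

```python
# Assumption (not stated in the article): the 1.4-exaflop figure is for the
# GB200 NVL72 rack, which NVIDIA describes as containing 72 Blackwell GPUs.
GPUS_PER_NVL72 = 72
PETAFLOPS_PER_GPU = 20  # B200 FP4 figure quoted in the article

rack_petaflops = GPUS_PER_NVL72 * PETAFLOPS_PER_GPU
rack_exaflops = rack_petaflops / 1000  # 1 exaflop = 1,000 petaflops

if __name__ == "__main__":
    print(f"{rack_petaflops} petaflops = {rack_exaflops:.2f} exaflops")
```

Seventy-two GPUs at 20 petaflops each gives 1,440 petaflops, or about 1.4 exaflops, matching the quoted rack figure.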

The Blackwell platform will be available from various partners later this year, including AWS, Google Cloud, Microsoft Azure, Oracle Cloud Infrastructure, and other leading cloud service providers and software makers.

This new offering certainly marks a significant leap forward in AI computing, promising faster, more efficient, and more accessible AI capabilities for industries worldwide.

Karan Kamble writes on science and technology. He occasionally wears the hat of a video anchor for Swarajya's online video programmes.